Decrypto

Crypto AKA "How to Lose Friends & Alienate People"

ML 4 Trading

When I started my MS program, I wanted to understand the difference between AI and ML. I took one course on classical AI algorithms and another on ML algorithms for financial trading. I took a real liking to the latter, and after it ended I used what I learned to build a bunch of cool tools.

The Project

We covered Q-learning and other reinforcement learning algorithms in that course. I thought the underlying mental model was pretty slick, and even if I didn’t use the exact Q algorithm, I wanted to build systems that could make automated financial trades on my behalf with custom-made AI. Decrypto was a small endeavour I started with a few former students of mine, both to give us all a real side project and to learn more about data science and ML. It consisted of quite a few subcomponents:

- A tool I built to pull historical crypto price data from websites like CoinMarketCap, both by scraping and through their APIs (Decrypto Data Services). It was also responsible for cleaning, transforming, and locally exporting any fetched data.

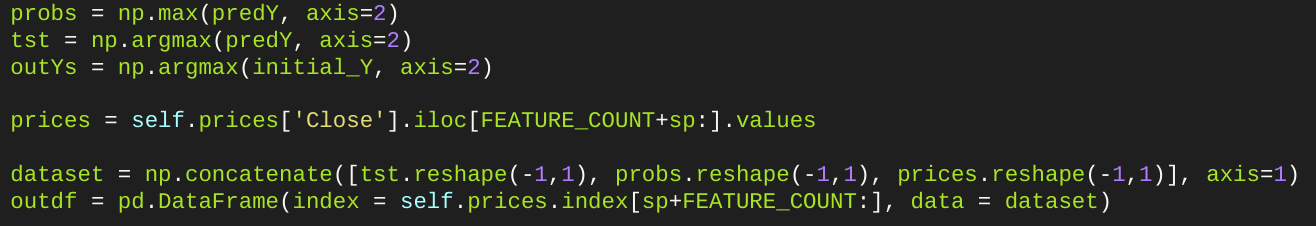

- A small ML library I built that implemented a few different algorithms for ingesting the fetched data and constructing models (Decrypto Decision Services). It had an XGBoost decision tree learner, a classical Q learner, and a convolutional neural network, all behind a layer that made it easy to train, test, export, and backtest models.

- A backend layer based on Django that handled online ingestion of data using the Data Service and exposed API interaction for programmatic trading with my chosen exchange platform (Decrypto AutoBuyer).

- A frontend layer built by my students that let us kill the trading bot manually in adverse events and chart the bot’s performance over time (Decrypto Frontend).
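For anyone who hasn't met the classical Q learner mentioned in the list above, the core update rule fits in a few lines. This is a generic tabular sketch, not the Decrypto code: the action set, the string state labels, the reward, and the hyperparameters `alpha` and `gamma` are all assumptions made for illustration.

```python
from collections import defaultdict

ACTIONS = ("buy", "hold", "sell")  # hypothetical action set


class TabularQLearner:
    """Minimal tabular Q-learning: one value per (state, action) pair."""

    def __init__(self, alpha=0.2, gamma=0.9):
        self.alpha = alpha  # learning rate (assumed value)
        self.gamma = gamma  # discount factor (assumed value)
        self.q = defaultdict(float)  # unseen pairs start at 0.0

    def best_action(self, state):
        # Greedy choice: the action with the highest learned value.
        return max(ACTIONS, key=lambda a: self.q[(state, a)])

    def update(self, state, action, reward, next_state):
        # Move Q(s, a) toward reward + gamma * max_a' Q(s', a').
        best_next = max(self.q[(next_state, a)] for a in ACTIONS)
        target = reward + self.gamma * best_next
        self.q[(state, action)] += self.alpha * (target - self.q[(state, action)])


learner = TabularQLearner()
learner.update("price_up", "buy", reward=1.0, next_state="price_flat")
print(learner.best_action("price_up"))
```

A real trader would also need an exploration strategy and a way to discretize market data into states, both of which are left out here.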

The Result

The frontend was excellent. My students did a really great job making something simple and functional in React that we could expand as necessary to improve charting capabilities or display model backtests.

The backend layer itself was also quite easy to write. Buying services were easy to implement on most exchanges, and we really only had to be careful to avoid the usual problem of accidentally committing API secrets to GitHub. We wired up some test accounts and, before we launched, we were able to simulate receiving decisions from the model and executing small trades.
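One common way to keep secrets out of a repository is to read them from environment variables at startup and fail loudly when they are missing. The sketch below illustrates that idea only; the variable names `EXCHANGE_API_KEY` and `EXCHANGE_API_SECRET` and the helper function are hypothetical, not what Decrypto actually used.

```python
import os


def load_exchange_credentials(env=os.environ):
    """Read API credentials from the environment, failing loudly if absent."""
    # Hypothetical variable names, chosen for illustration.
    names = ("EXCHANGE_API_KEY", "EXCHANGE_API_SECRET")
    missing = [name for name in names if not env.get(name)]
    if missing:
        raise RuntimeError(f"missing environment variables: {', '.join(missing)}")
    return {name: env[name] for name in names}


# Usage with an explicit mapping, which also makes the function easy to test.
credentials = load_exchange_credentials(
    {"EXCHANGE_API_KEY": "test-key", "EXCHANGE_API_SECRET": "test-secret"}
)
print(sorted(credentials))
```

Passing the mapping in as a parameter means tests never have to touch the real environment.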

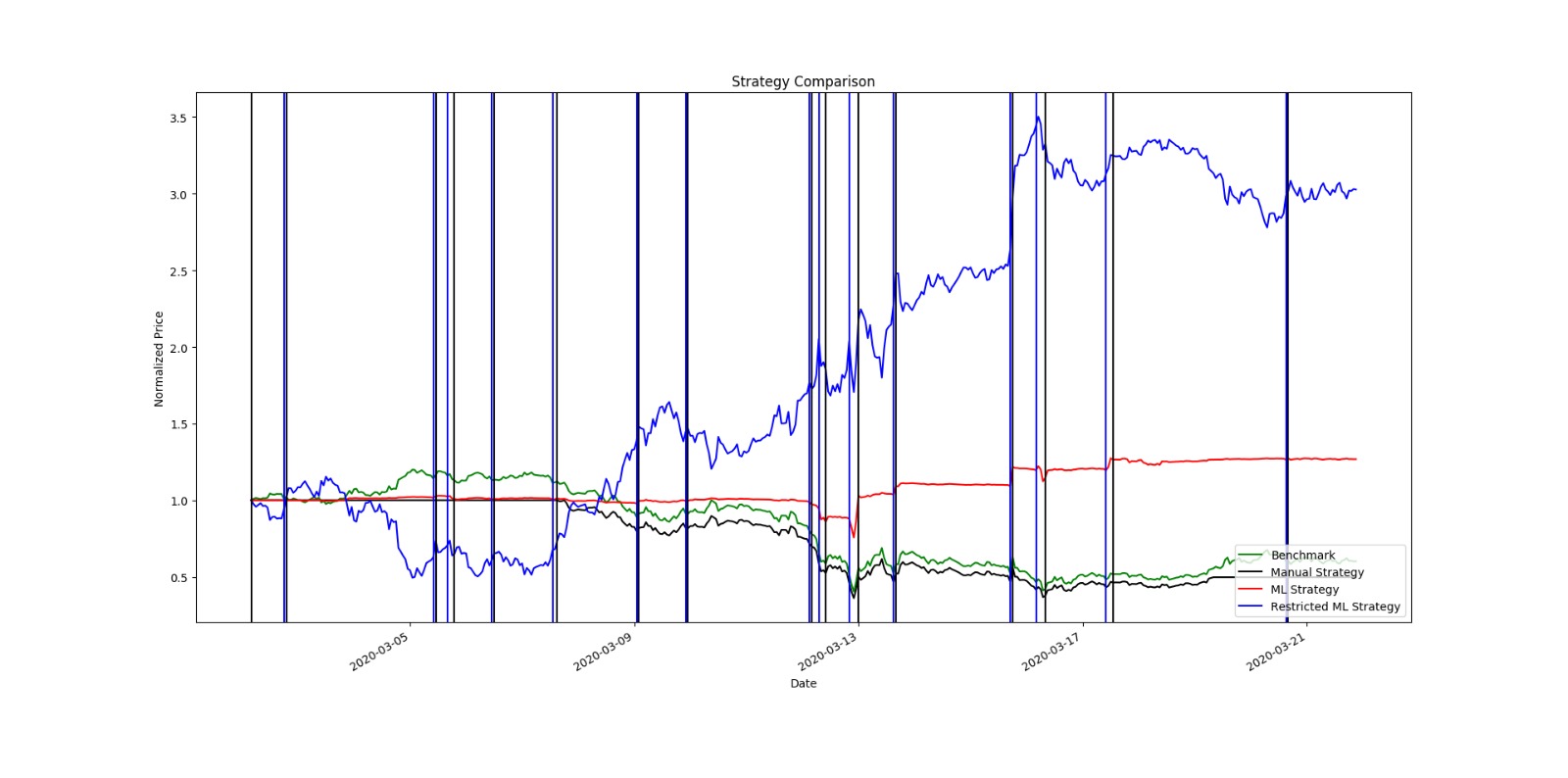

Of course, the model layer was harder overall. Writing our own backtesting code was not the worst endeavour, given that I did it a lot in my MS program course, but backtesting, no matter how carefully applied, can often “write a check you couldn’t actually cash.” It’s important to stay skeptical about performance and to go for smaller, reliable gains rather than big bets, especially at inception.
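To make the "check you couldn't cash" point concrete, here is a minimal backtest sketch showing how trading fees alone eat into a result that looks good on paper. It is not our backtester: the long-or-flat position scheme, the `fee_rate` value, and the toy price series are all assumptions.

```python
def backtest(prices, positions, fee_rate=0.001, starting_cash=1000.0):
    """Replay long-or-flat positions over a price series and return final equity.

    positions[i] is the position (1 = fully invested, 0 = in cash) held from
    prices[i] to prices[i + 1]; it should be decided using data up to i only.
    """
    if len(positions) != len(prices) - 1:
        raise ValueError("need exactly one position per price interval")
    equity = starting_cash
    previous = 0  # start in cash
    for i, position in enumerate(positions):
        if position != previous:
            equity *= 1 - fee_rate  # pay a fee whenever the position changes
        if position == 1:
            equity *= prices[i + 1] / prices[i]  # earn the interval's return
        previous = position
    return equity


prices = [100.0, 110.0, 99.0, 108.9]  # toy data
positions = [1, 0, 1]  # a "perfect" strategy that dodges the dip
frictionless = backtest(prices, positions, fee_rate=0.0)
with_fees = backtest(prices, positions, fee_rate=0.01)
print(frictionless, with_fees)
```

Even with perfect foresight on this toy series, the version with fees ends lower than the frictionless one. Slippage and failed orders, which this sketch ignores, widen the gap further in live trading.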

We let it run for about a month and a half on Azure, and I think it did quite well. We lost about $100 overall, but the model never came close to total loss and we were never really worried about it. We did have to use the frontend to terminate the trader a few times, but that was mostly because of simple mistakes like API errors when actually placing orders.

Insights

In no particular order, here are some of the things I learned from this project:

- If I had to start over, I would architect this a lot more robustly. I like the domain boundaries I set, but I would write the implementation with more attention to proper OOP design. A lot of the model plumbing would have been much easier if I had spent time making each algorithm properly substitutable for another.

- Models are fun, but I wish I’d had more practical experience with best practices for keeping them updated. Losses came mostly from periods where we rolled the training data forward in time to inform the RL policies; I was probably missing some basic experience with online / live ML work.

- I would also have started on admin features for the frontend early on. I have learned over time to optimize for a good development experience, and I think that investing time in observability and admin tools would have greatly improved the safety and speed of the project.

- Testing is essential. Whatever problems we had, we codified in pytest tests so they didn’t recur. We also learned early on to test the models as best we could for stable predictions.
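Two of the points above, substitutable algorithms and testing for stable predictions, fit together naturally. The sketch below shows one possible shape for that, not what Decrypto shipped: the `Learner` protocol, the two toy learners, and the `train_and_predict` helper are all hypothetical names invented for illustration.

```python
from __future__ import annotations

from typing import Protocol, Sequence


class Learner(Protocol):
    """The shared interface every algorithm would implement."""

    def fit(self, features: Sequence[Sequence[float]], targets: Sequence[float]) -> None: ...
    def predict(self, features: Sequence[Sequence[float]]) -> list[float]: ...


class MeanLearner:
    """Toy stand-in: always predicts the mean of the training targets."""

    def __init__(self) -> None:
        self.mean = 0.0

    def fit(self, features, targets):
        self.mean = sum(targets) / len(targets)

    def predict(self, features):
        return [self.mean for _ in features]


class LastValueLearner:
    """Toy stand-in: always predicts the last training target."""

    def __init__(self) -> None:
        self.last = 0.0

    def fit(self, features, targets):
        self.last = targets[-1]

    def predict(self, features):
        return [self.last for _ in features]


def train_and_predict(learner: Learner, train_x, train_y, test_x) -> list[float]:
    # The plumbing depends only on the interface, so learners can be swapped.
    learner.fit(train_x, train_y)
    return learner.predict(test_x)


def test_predictions_are_stable():
    # pytest-style check: same data in, same predictions out, for every learner.
    x, y = [[1.0], [2.0], [3.0]], [1.0, 2.0, 6.0]
    for make_learner in (MeanLearner, LastValueLearner):
        first = train_and_predict(make_learner(), x, y, x)
        second = train_and_predict(make_learner(), x, y, x)
        assert first == second
```

With real models the stability check would also need fixed random seeds, and likely a tolerance rather than exact equality.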